Code Is Not Thought

If a machine can mimic a thought, does that mean it is thinking? This dialogue strips away the hype of modern AI to reveal the "Code That Could Never Think." By examining the structural limitations of computation, we explore the difference between a system that follows rules and a system that generates meaning. It’s a provocative look at why our current path in AI is not a bridge to intelligence, but a detour away from it.

Dr. William Compute – CTO of a Fortune 100 company

Dr. Marcus Doubtful – physicist turned philosopher

William: Marcus, with all this talk about Artificial Intelligence, I’ve been rethinking what computers can actually achieve.

Marcus: That’s unexpected coming from you. Especially now — AI is advancing in every field. You can’t deny how useful these systems have become. So what exactly are you skeptical about?

William: Their intelligence. I don’t deny their usefulness — that’s my job. I build these systems every day. What I question is the claim that we’re anywhere close to actual intelligence, in the sense of understanding, reasoning, or independent thought.

Marcus: Interesting. Go on.

William: Traditional programming is literal. Machines do exactly what we tell them. Miss a semicolon? The program won’t even compile. Forget an input case? It fails. There’s no intelligence, no comprehension; I have to specify every step. If I want something new, I rewrite everything from scratch.

Marcus: Fair enough. But what about modern AI? You’ve seen the breakthroughs in machine learning — language models, image recognition, even creative writing. It’s hard not to get excited about the progress and the usefulness.

William: That’s what frustrates me most. AI feels different — we don’t program it step by step — but we still have to train it on massive datasets for each new problem. And half the time I’m not even sure if it’s just spitting out something that sounds right. At least with traditional code, I know what it will do.

Marcus: Let me play devil’s advocate. Any problem we can define and break into logical steps can, in principle, be solved by computers. Isn’t that limitless?

William: But that’s the point! Someone still has to define and break down the problem. Whether I’m coding directly, curating datasets, or designing algorithms — there’s always a human doing the actual thinking. The machine is just fast at executing instructions.

Marcus: And you see that as a problem?

William: I see it as a limit. Computers are tools, nothing more. Even the most advanced AI just augments human intelligence. It’s like Excel for an accountant or a car for a traveler: useful, but not transformative. The human still frames the task, checks the results, and decides what matters.

Marcus: So computers give us mental mobility — a speed boost — but not true intelligence.

William: Right, and they’re amazing at it. But we train them on mountains of data and define what counts as success. The computer isn’t deciding anything for itself; we are.

Marcus: But we’re making progress. Look at chess, image recognition, translation, medical diagnostics. Tasks that once required human thought are now automated. Why not believe intelligence is just a matter of more power and better algorithms? Some argue that the brain is just a biological computer. If we simulate enough neurons with enough complexity — quantum or otherwise — won’t intelligence emerge?

William: But even that requires human decisions: Do we simulate atoms, proteins, neurons? At what scale? What counts as success? In the end, we choose the architecture and objectives. The intelligence is borrowed from the designers.

Marcus: Suppose we could build a system that learns to frame its own problems. Wouldn’t that solve it?

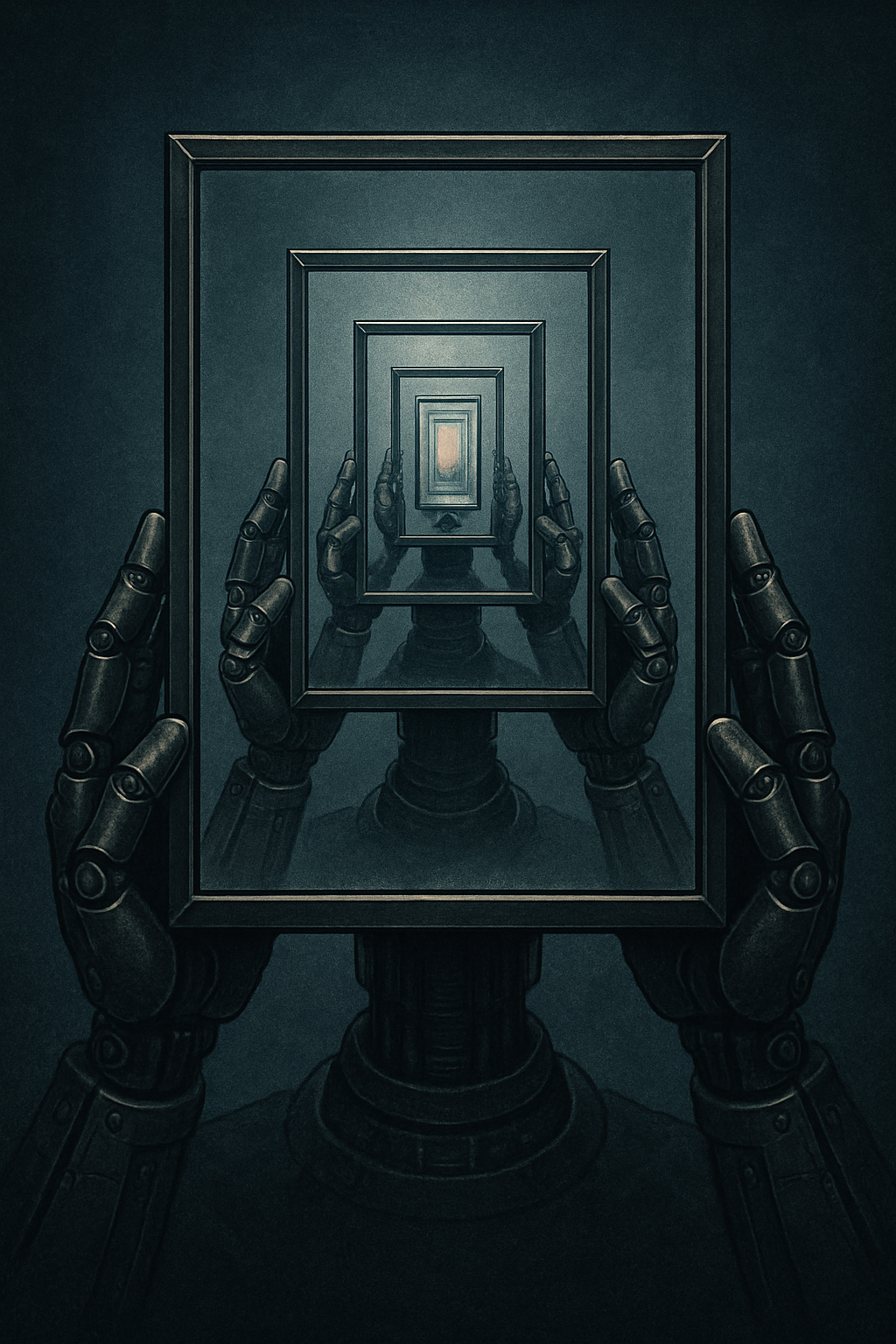

William: Who frames the meta-problem of learning to frame problems? If we build a program to set goals for another program, who sets its goals?

Marcus: Is this what philosophers call the “frame problem”?

William: In some sense, yes! A model can’t evaluate the actions it generates without some external frame: a standard for what counts as good, bad, success, failure. And that standard always comes from us. Even if you try to build a model that evaluates its own goals, you’ve just shifted the framing problem up a level: now that evaluator needs an evaluator. It’s an infinite regress. There’s always a human somewhere in the loop.

Marcus: Evolution, then. No designer framed goals for life — selective pressures shaped intelligence over time. Couldn’t we replicate that?

William: Only if we decide what selective pressures to simulate, what environment to model, how to measure “fitness.” Even artificial evolution relies on human framing.

Marcus: So you’re saying the real challenge isn’t building smarter algorithms — it’s escaping this framing trap altogether.

William: Exactly. True intelligence would require defining its own problems and purposes. And I see no way to achieve that with current paradigms. Every model we make presupposes an evaluator outside the model. To judge the model, you need another model — and so on, forever.

Marcus: Which makes the framing problem unsolvable within the traditional approach.

William: Exactly. And that’s why I feel stuck.

Marcus: Or maybe not stuck — just standing at the edge of a different way of thinking. There’s an approach I’ve been exploring. We call it Geneosophy.

William: Geneosophy? Sounds mystical.

Marcus: It isn’t mystical — it’s about recognizing that intelligence can’t be imposed from the outside. It has to generate its own frame — its own standards of meaning — without being told what to optimize.

William: So you’re not talking about better algorithms. You’re talking about a system that learns to ask why before it ever answers how.

Marcus: Exactly. A system that can ask both why and how. Not patching the framing trap — dissolving it.

William: And if that’s possible… it wouldn’t just be another step forward for AI. It would be something else entirely.

Marcus: A mind not explicitly designed — only discovered.

William: Then maybe we’re no longer building machines in the traditional sense. We’re evaluating… whatever comes next.

Marcus: Precisely. But that’s a conversation for another night.

(Silence.)